Our pipeline starts by reading a medical dataset, for which the user then selects one or more so-called presets. A preset comprises the transfer function setting, material classifications, and fixed clip planes that reveal certain anatomical structures. For each preset, a set of views is computed that captures, at varying resolution, all structures in the data that can potentially be seen. In this way, structures that are not visible from camera positions on a surrounding sphere are also recovered in the final object representation.
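For contrast, the baseline mentioned above (cameras on a surrounding sphere) can be sketched as follows. This is a minimal illustration using Fibonacci-sphere sampling, which is an assumption chosen for its near-uniform coverage. It is not the preset-specific view selection of the pipeline, and the function name is hypothetical.

```python
import math

def fibonacci_sphere_cameras(n, radius, center=(0.0, 0.0, 0.0)):
    """Hypothetical helper: place n cameras roughly uniformly on a sphere
    around `center`, each looking at the center.
    Returns a list of (position, unit_view_direction) tuples."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    cameras = []
    for i in range(n):
        # z steps evenly from just below +1 to just above -1
        z = 1.0 - (2.0 * i + 1.0) / n
        r = math.sqrt(1.0 - z * z)  # radius of the horizontal circle at height z
        theta = golden_angle * i    # rotate by the golden angle per sample
        x, y = r * math.cos(theta), r * math.sin(theta)
        pos = (center[0] + radius * x,
               center[1] + radius * y,
               center[2] + radius * z)
        # (x, y, z) has unit length, so its negation is a unit direction to the center
        view_dir = (-x, -y, -z)
        cameras.append((pos, view_dir))
    return cameras
```

For example, `fibonacci_sphere_cameras(64, 2.5)` yields 64 viewpoints at distance 2.5 from the origin. Because every such camera sits outside the volume and looks inward, structures that are occluded from all outside viewpoints can remain unseen, which is the gap the additional preset-specific views are meant to close.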

These views are handed over to a physically based renderer, i.e., a volumetric path tracer, which renders one image per view using the corresponding preset. Once all images for a selected preset have been rendered, 3DGS is used to generate a set of 3D Gaussians with shape and appearance attributes whose rendering matches the given images. After the Gaussians have been optimized via differentiable rendering, they are compressed using sensitivity-aware vector quantization and entropy encoding.
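The compression idea can be illustrated with a minimal sketch, assuming a per-Gaussian sensitivity score is already available as a weight (how it is computed is not described here). Attribute vectors are clustered with weighted k-means, so high-sensitivity Gaussians pull codebook entries toward themselves, and each Gaussian keeps only a codebook index. The Shannon entropy of those indices gives a rough estimate of the bits per index an entropy coder could approach. Function names are hypothetical; this is not the authors' implementation.

```python
import math
import random
from collections import Counter

def weighted_kmeans(points, weights, k, iters=20, seed=0):
    """Sensitivity-weighted k-means (illustrative sketch).
    points: list of equal-length attribute tuples; weights: assumed
    per-point sensitivity scores. Returns (codebook, labels)."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    labels = [0] * len(points)
    dim = len(points[0])
    for _ in range(iters):
        # Assignment: nearest centroid by squared Euclidean distance.
        # A per-point weight scales all of its distances equally,
        # so it does not change which centroid is nearest.
        for i, p in enumerate(points):
            labels[i] = min(
                range(k),
                key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centroids[c])),
            )
        # Update: each centroid becomes the weighted mean of its members.
        sums = [[0.0] * dim for _ in range(k)]
        wsum = [0.0] * k
        for p, w, c in zip(points, weights, labels):
            wsum[c] += w
            for d in range(dim):
                sums[c][d] += w * p[d]
        for c in range(k):
            if wsum[c] > 0:  # keep the old centroid if a cluster is empty
                centroids[c] = [s / wsum[c] for s in sums[c]]
    return centroids, labels

def entropy_bits_per_symbol(labels):
    """Shannon entropy (bits/symbol) of the codebook indices."""
    counts = Counter(labels)
    n = len(labels)
    return -sum(c / n * math.log2(c / n) for c in counts.values())
```

With two well-separated groups of points, the codebook entry for a group is biased toward its most sensitive member, and two equally used indices cost about one bit each.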

The final compressed 3DGS representation is rendered with WebGPU: the projected 2D splats are sorted on the GPU and rasterized, and a pixel shader evaluates and blends the 2D projections in image space.
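The per-pixel work described above can be sketched on the CPU, assuming each projected splat has already been reduced to a 2D mean, an inverse 2D covariance, an opacity, a color, and a view-space depth (the dictionary keys below are hypothetical). The sketch sorts splats by depth, as the GPU sort does for the whole frame, then evaluates each 2D Gaussian at the pixel and composites front to back. The 0.99 opacity clamp, the 1/255 skip threshold, and the early-termination limit follow common 3DGS rasterizer practice and are assumptions here, not details of the WebGPU renderer.

```python
import math

def gaussian_alpha(px, py, mean, cov_inv, opacity):
    """Opacity contribution of a projected 2D Gaussian at pixel (px, py).
    cov_inv = (a, b, c) encodes the inverse covariance [[a, b], [b, c]]."""
    dx, dy = px - mean[0], py - mean[1]
    a, b, c = cov_inv
    power = -0.5 * (a * dx * dx + 2.0 * b * dx * dy + c * dy * dy)
    return min(0.99, opacity * math.exp(power))  # clamp is an assumed convention

def shade_pixel(px, py, splats, t_min=1e-4):
    """Front-to-back alpha blending of splats sorted by depth.
    Returns (rgb, remaining_transmittance)."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for sp in sorted(splats, key=lambda s: s["depth"]):
        alpha = gaussian_alpha(px, py, sp["mean"], sp["cov_inv"], sp["opacity"])
        if alpha < 1.0 / 255.0:  # negligible contribution at this pixel
            continue
        for ch in range(3):
            color[ch] += transmittance * alpha * sp["color"][ch]
        transmittance *= 1.0 - alpha
        if transmittance < t_min:  # pixel is effectively opaque
            break
    return color, transmittance
```

Compositing back to front with the "over" operator gives the same final color, so a renderer relying on hardware blending may traverse the sorted splats in the opposite order.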

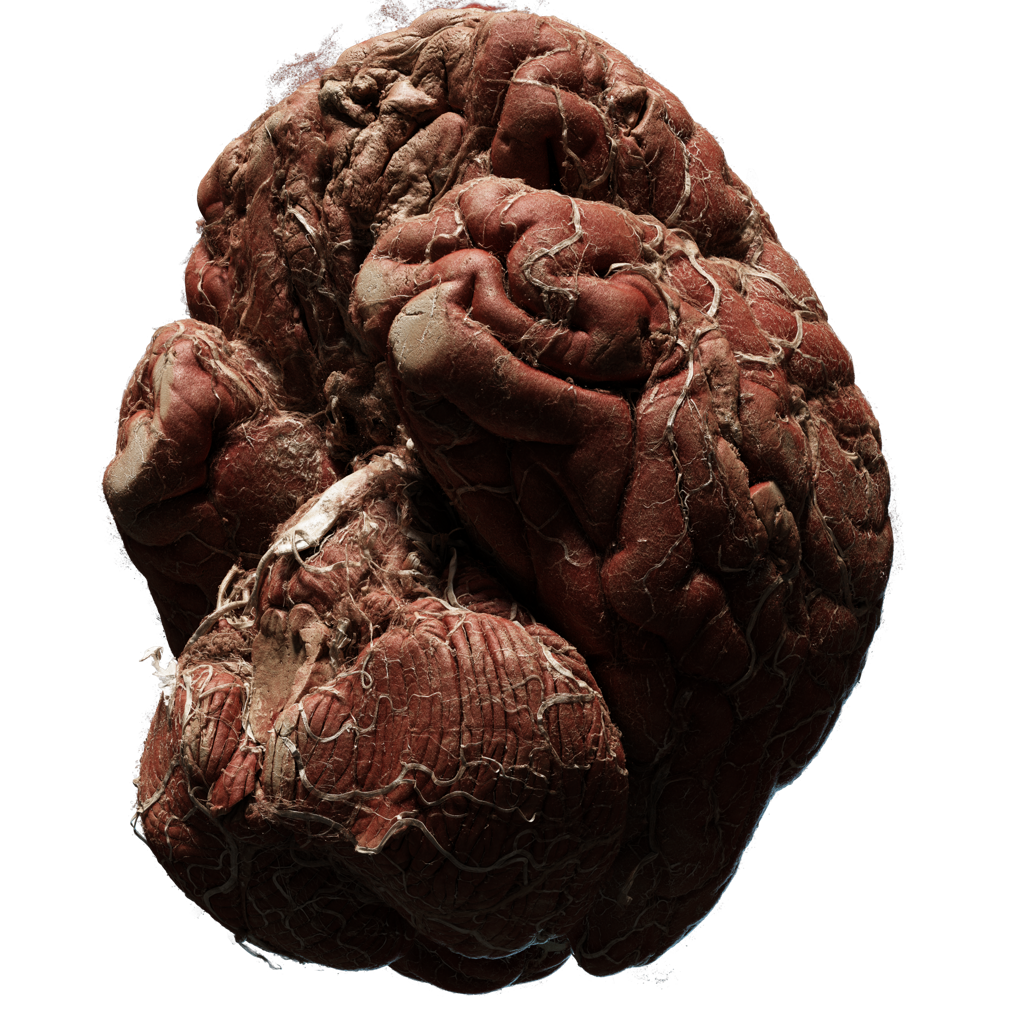

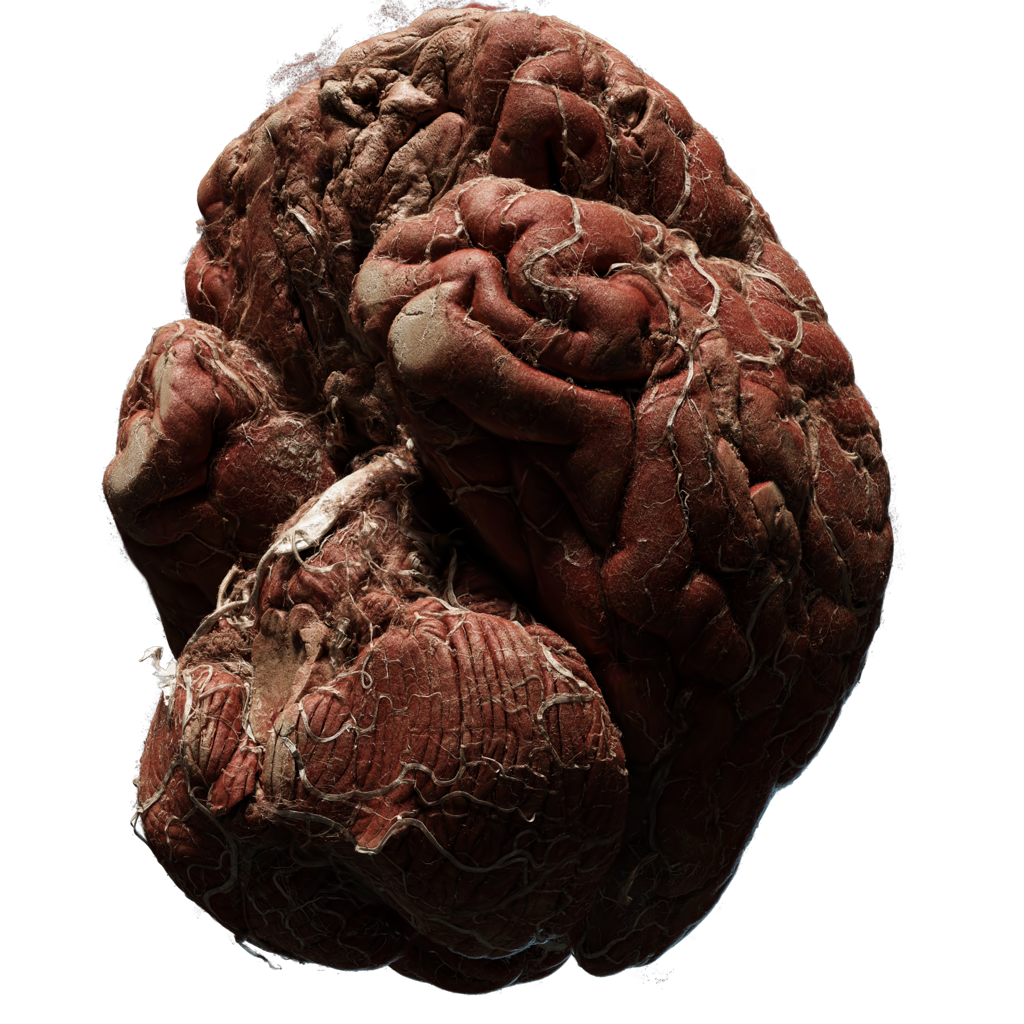

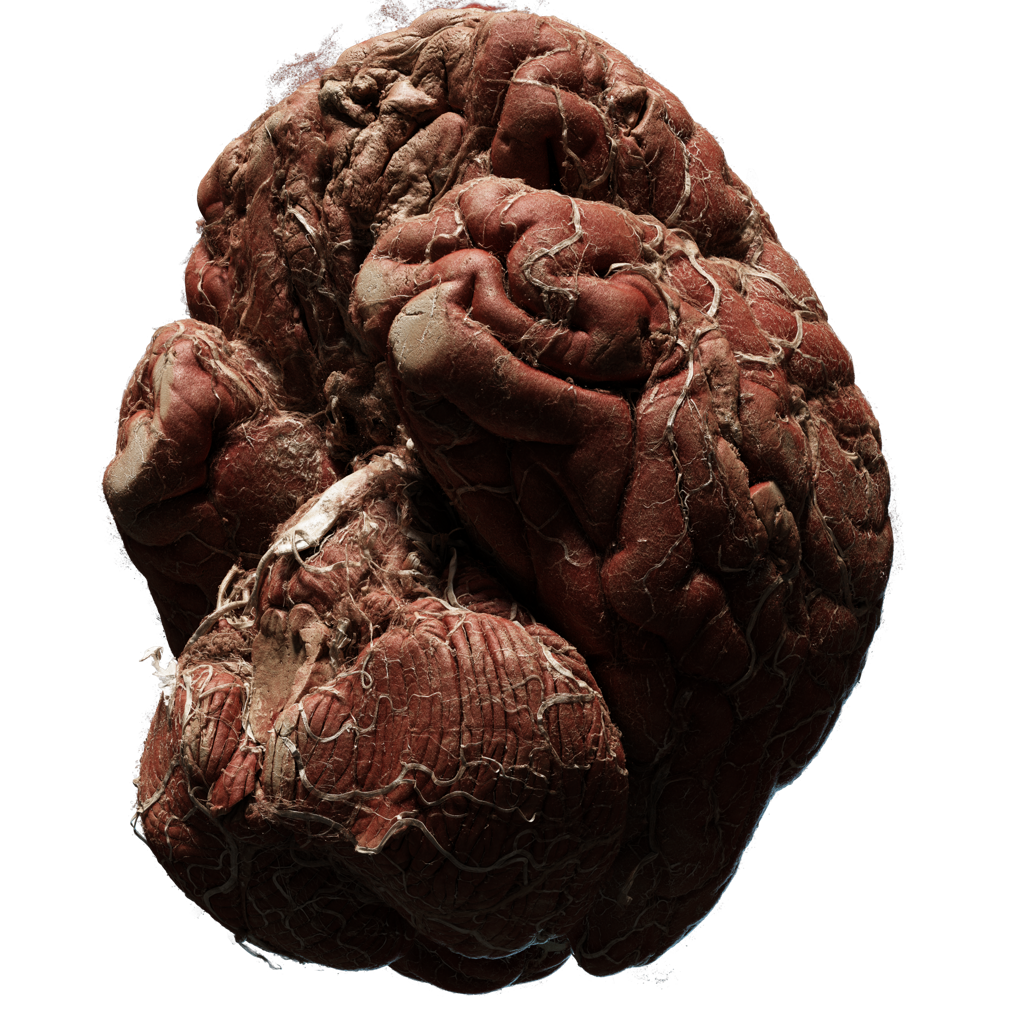

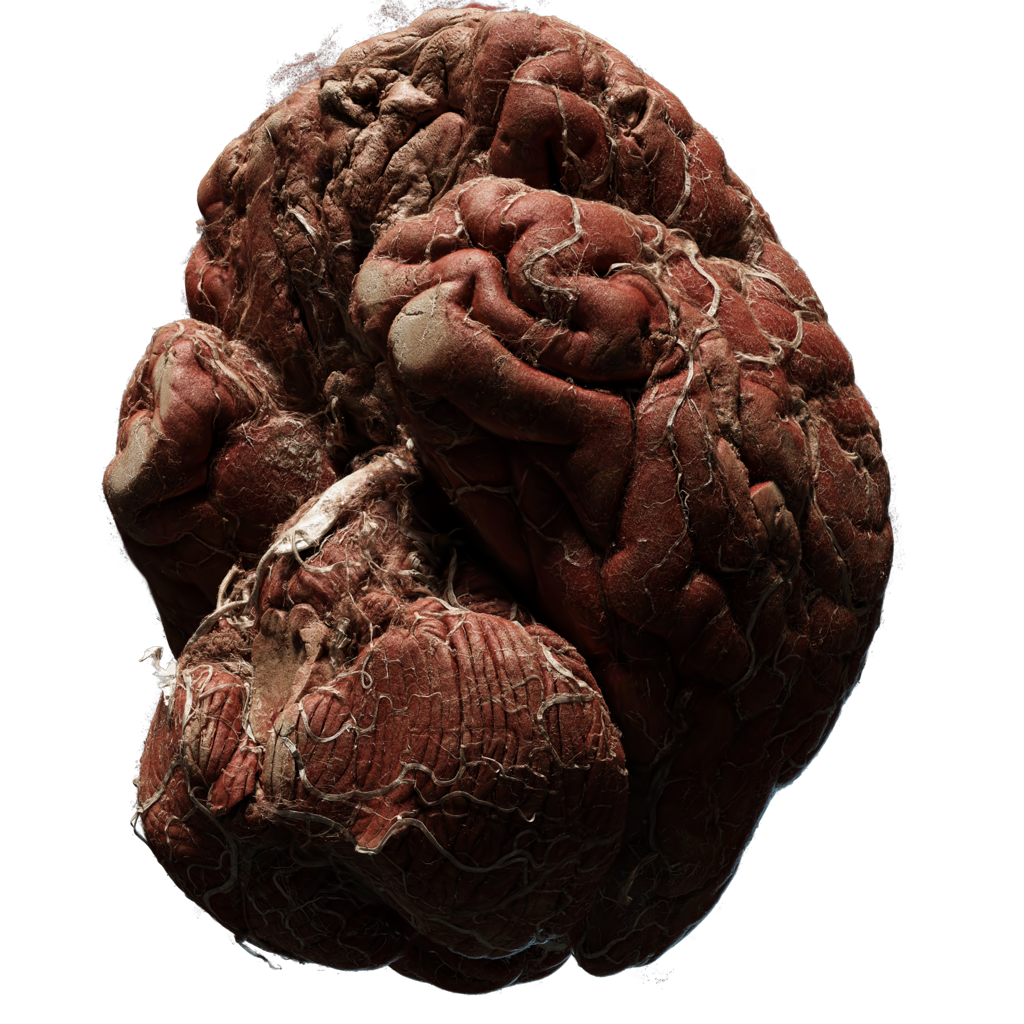

Comparison of path-traced images and our reconstructions. All images are from the test set.

```bibtex
@misc{niedermayr2024novel,
  title={Application of 3D Gaussian Splatting for Cinematic Anatomy on Consumer Class Devices},
  author={Simon Niedermayr and Christoph Neuhauser and Kaloian Petkov and Klaus Engel and Rüdiger Westermann},
  year={2024},
  eprint={2404.11285},
  archivePrefix={arXiv},
  primaryClass={cs.GR}
}
```